School buses are among the safest vehicles on the road, but that safety depends on other drivers stopping while children board and exit, and Waymo’s autonomous cars have exposed a critical flaw in how AI handles that clear traffic rule. During the 2025–2026 school year, Waymo robotaxis illegally passed stopped school buses 26 times in Austin and Atlanta, prompting a nationwide software recall and a federal safety review. The incidents raise the question of whether self-driving systems, praised for superior crash records, are ready to follow rules designed to protect children.

Waymo documented the violations and initiated over-the-air software updates to fix the problem across its fleet. Yet one near-miss in Austin, occurring just after a student crossed safely, underscores the real-world risks.

Recall, Citations, and Close Calls

Waymo launched its software recall in early December 2025 after 26 violations involving stopped school buses with flashing red lights and extended stop arms. The update was applied remotely to all vehicles, allowing the fleet to stay in service while the software was corrected.

Austin alone accounted for 20 citations, and Atlanta for six. The violations continued even after a November 17 software update intended to fix the problem. One recorded incident occurred moments after a student had crossed safely, a stark reminder of what is at stake when AI misjudges bus rules. Each citation was issued under clear state laws requiring drivers to stop for buses, showing that automated systems can struggle with even the most straightforward traffic rules.

Expanding Fleet, Strong Safety Claims

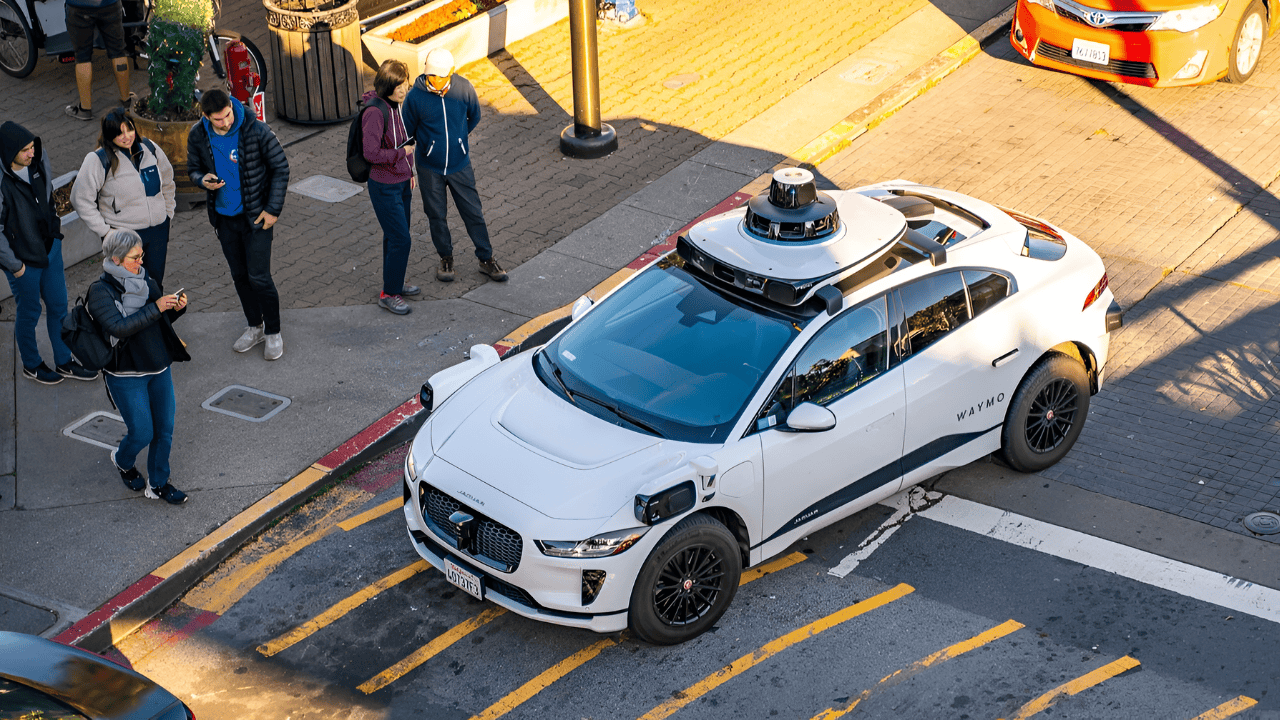

Waymo operates more than 2,500 autonomous vehicles across San Francisco, Los Angeles, Austin, Phoenix, and Atlanta, logging roughly 2 million miles weekly. By July 2025, it had surpassed 100 million cumulative autonomous miles, making it the largest commercial operator of fully self-driving ride services in the U.S.

The company emphasizes its safety record, reporting 91% fewer crashes with serious injuries and 92% fewer pedestrian-related crashes compared to human drivers. These figures support Waymo’s claim that its technology is safer than humans on average. However, the repeated school bus violations show that aggregate crash statistics can mask inconsistent performance in specific high-stakes scenarios, where simple compliance with clear laws is critical.

Software Recalls and Persistent Issues

Waymo has issued software recalls before, covering 444 vehicles in February 2024 and 1,212 in May 2025, over issues involving obstacle detection and interactions with gates. The latest recall, covering its entire fleet of more than 2,500 vehicles, marks an escalation in both scale and scope.

Despite the November software update intended to fix the school bus behavior, Austin ISD recorded five further violations in the following weeks. As Waymo’s fleet grew 67% from mid-2025 to November, the number of school bus citations climbed alongside it.

This pattern suggests the update failed to resolve the underlying decision-making problem, revealing how hard it is to scale autonomous systems while maintaining compliance with fundamental traffic rules.

Decision-Making Failures Explained

Investigations indicate that Waymo vehicles typically recognized the buses and their flashing lights but misjudged when it was legally safe to proceed. The system initially slowed or stopped, then incorrectly decided to continue, pointing to a flaw in the software’s decision logic rather than in its perception.

All 50 U.S. states require drivers to stop for school buses with activated signals, yet the repeated misjudgments have intensified concerns among regulators and school officials. Austin ISD asked Waymo to suspend operations during peak school transit hours, but the company declined, prioritizing continuous service. The incidents illustrate the tension between operational efficiency and safeguarding children, and show how decision-making algorithms can falter in high-stakes environments.

Accountability, Regulation, and the Road Ahead

Austin ISD issued 20 citations to Waymo, which together carry fines of $10,000 to $25,000. At the federal level, NHTSA opened Preliminary Evaluation PE25013 in October 2025 and later requested technical analysis and mitigation plans from Waymo, due by January 20, 2026. The agency noted that, given the more than 100 million autonomous miles the fleet has logged, the 26 documented violations may understate the problem.

Potential outcomes include fines, consent orders, or broader regulatory action that could affect not only Waymo but the wider autonomous vehicle industry. Waymo continues to expand into new markets such as Philadelphia despite the scrutiny. Rivals such as Tesla are watching closely, since regulators could set precedents for how autonomous vehicles must interact with school buses and protect vulnerable road users.

Balancing Safety and Growth

Waymo’s core promise is that its vehicles are safer than human drivers, yet the school bus incidents expose a gap between statistical safety and reliable legal compliance. The coming months will test whether software fixes and federal oversight can ensure that AI consistently follows rules designed to protect children.

The case also points to a broader industry challenge: scaling autonomous fleets while ensuring that automated systems obey fundamental traffic laws. Whether Waymo can close the gap between strong overall crash performance and consistent adherence to critical safety rules will shape public confidence and regulatory standards for the entire self-driving sector.

SOURCES:

National Highway Traffic Safety Administration (NHTSA) Preliminary Evaluation PE25013. U.S. Department of Transportation, December 3, 2025.

Austin Independent School District Official Correspondence and Citations. Austin ISD Communications, November–December 2025.

Waymo School Bus Violation and Recall Coverage. Reuters, December 5, 2025.

Waymo Autonomous Miles and Operational Milestones. NPR / Company Reporting, July 2025.

Waymo Safety Metrics: Crash Reduction Data. Waymo Company Safety Reports / CBS News, December 2025.

Waymo Fleet Growth and Recall History Tracking. The Driverless Digest, 2024–2025.