On October 20, 2025, people around the world experienced a sudden digital shutdown. Major websites and apps, from banking platforms and games to government portals and smart devices, refused to load.

Within hours, reporters and analysts confirmed the source: Amazon Web Services (AWS), the largest cloud computing provider, had suffered a massive failure. This single technical issue temporarily disrupted more than 60 major services worldwide, exposing how deeply the internet depends on one company’s infrastructure.

AWS, which holds roughly a third of the global cloud market, has been described as the “invisible backbone” of modern life, and its brief collapse showed just how fragile that backbone can be.

Downdetector Erupts

By 8:00 AM in the UK, outage notifications poured into Downdetector, a website that tracks service disruptions. Reports skyrocketed from users of Snapchat, Fortnite, Robinhood, and countless other services.

Within half an hour, terms like “AWS down” were trending globally. The difference this time was scale; it wasn’t one or two websites but dozens failing together.

This synchronized breakdown across unrelated systems was a clear sign that a shared provider, rather than isolated technical faults, was to blame.
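
The inference is simple to make concrete: if every failing service shares one provider, that provider becomes the prime suspect. The minimal Python sketch below illustrates the idea; the service names and their provider dependencies are hypothetical placeholders, not real architecture data.

```python
# Hypothetical dependency data: which providers each failing service uses.
# Names and dependencies are illustrative placeholders, not real data.
SERVICE_PROVIDERS: dict[str, set[str]] = {
    "chat_app": {"AWS", "CDN-X"},
    "game": {"AWS", "Azure"},
    "broker": {"AWS", "CDN-X"},
}


def shared_suspects(failing: list[str], deps: dict[str, set[str]]) -> set[str]:
    """Return the providers used by every service in `failing`."""
    if not failing:
        return set()
    common = set(deps[failing[0]])  # copy so the input data is not modified
    for name in failing[1:]:
        common &= deps[name]  # keep only providers this service also uses
    return common


print(shared_suspects(["chat_app", "game", "broker"], SERVICE_PROVIDERS))
```

The more unrelated services fail at once, the smaller that intersection gets, which is why a wave of simultaneous outages points so quickly to a shared provider.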

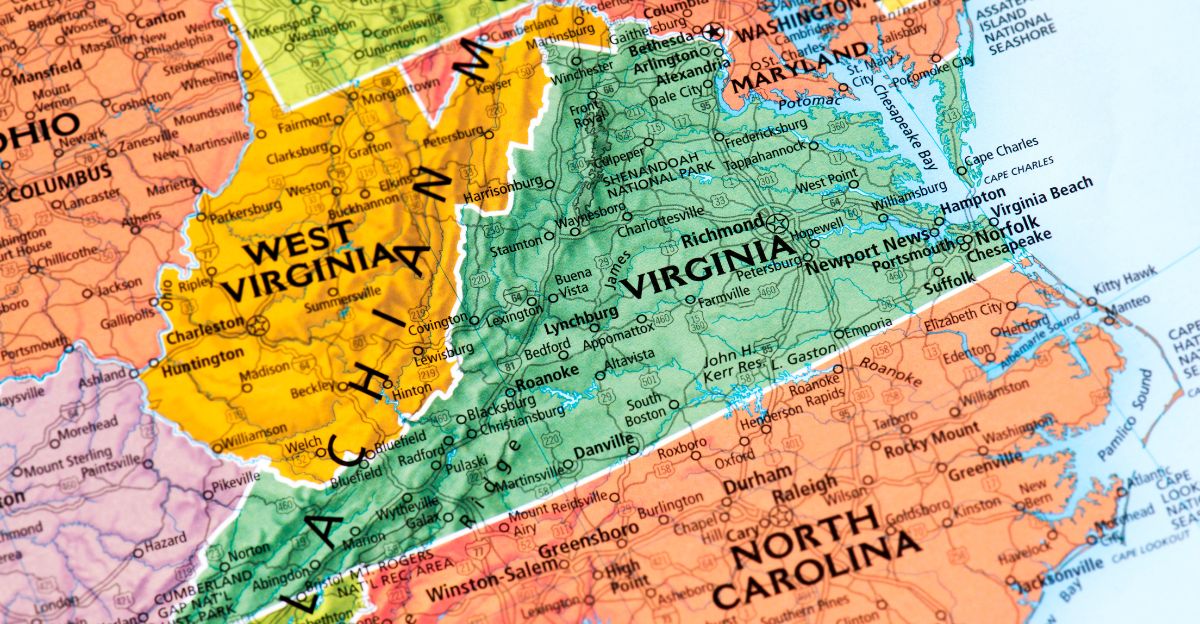

Virginia’s Digital Kingdom

The problem traced back to AWS’s US-EAST-1 region, its original and most critical cluster of data centers in Northern Virginia. This region, operational since 2006, processes around 30% of AWS’s total traffic.

Because so many companies rely on it for speed and cost advantages, any disruption there can ripple worldwide. When US-EAST-1 falters, everything from corporate websites to mobile apps and payment systems wavers with it.

This concentration of digital traffic became the epicenter of the global outage.

Cloud Dominance

Amazon Web Services leads the global cloud market with a 30–32% share, ahead of Microsoft Azure (20–23%) and Google Cloud (10–13%). In early 2025 alone, AWS generated over $29 billion in quarterly revenue.

Over half its user base is in North America, where businesses depend heavily on its infrastructure. AWS powers millions of clients, ranging from major governments and corporations to small developers.

When its servers malfunctioned that October morning, the effect spanned continents, industries, and economies, underlining how connected every digital system has become.

The DNS Revelation

At 9:27 AM BST, AWS confirmed the culprit: a DNS resolution failure affecting the US-EAST-1 endpoint of DynamoDB, a database service that countless apps depend on. DNS acts as the “phonebook” of the web, translating domain names into IP addresses.

With that lookup failing, software around the world couldn’t work out where to send its data, and thousands of platforms, from financial apps to gaming servers, froze.
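
The phonebook analogy can be illustrated with a toy Python model. It is not real DNS: an in-memory table stands in for the global name system, the hostname simply follows AWS’s usual service.region.amazonaws.com naming pattern, and the IP addresses come from a range reserved for documentation. What it shows is that the database servers may still be running, but a client that cannot look up their address has nowhere to send its request.

```python
ENDPOINT = "dynamodb.us-east-1.amazonaws.com"


class ResolutionError(Exception):
    """Raised when a name has no address, like a blank phonebook entry."""


def resolve(name: str, table: dict[str, list[str]]) -> str:
    """Look up the first address for `name` in a toy name table."""
    addresses = table.get(name, [])
    if not addresses:
        raise ResolutionError(f"no address found for {name}")
    return addresses[0]


def send_request(name: str, table: dict[str, list[str]]) -> str:
    """A client must resolve the name before it can send anything."""
    try:
        ip = resolve(name, table)
    except ResolutionError as err:
        return f"request failed: {err}"
    return f"request sent to {ip}"


# 192.0.2.0/24 is reserved for documentation; these addresses are placeholders.
healthy = {ENDPOINT: ["192.0.2.10", "192.0.2.11"]}
broken = {ENDPOINT: []}  # the entry still exists but holds no addresses

print(send_request(ENDPOINT, healthy))
print(send_request(ENDPOINT, broken))
```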

In total, 28 AWS products were hit, with users describing the event as “like someone pulled the plug on the internet.”

Banking in Blackout

Financial services were among the first casualties. Lloyds Bank customers in the UK couldn’t log in, Coinbase and Robinhood traders were locked out, and Venmo transactions stalled.

Coinbase reassured users that their funds were safe, but that did little to stop the panic. For banks processing billions in daily transfers, each minute offline meant both financial losses and regulatory stress.

The event revealed how modern banking is only as strong as the cloud it runs on.

Gamers Go Dark

Popular online games like Fortnite, Roblox, and Clash Royale went offline almost simultaneously. With login systems, game servers, and player data hosted on AWS, millions found themselves disconnected.

Gaming companies rely on real-time connectivity, so interruptions cost huge sums in lost playtime and microtransactions.

Social media quickly filled with frustrated players comparing experiences from around the globe. The entertainment industry’s digital heartbeat had skipped.

Government Disruption

Even government websites weren’t immune. The UK’s HMRC tax portal went down, and airlines including United and Delta also suffered disruptions, leaving travelers unable to check in and citizens unable to reach critical online services.

The disruption raised serious questions about outsourcing public infrastructure to private cloud giants.

The outage didn’t just highlight convenience issues; it demonstrated that essential public systems now depend on the uptime of a single commercial provider.

Market Tremors

Investors reacted quickly. Amazon’s stock dipped slightly, but analysts worried more about AWS’s reputation for reliability. In 2024, risk firm Parametrix estimated that a 24-hour AWS regional outage could cause $3.4 billion in losses for Fortune 500 companies.

Though October’s crisis lasted less than a day, early projections placed the real cost in the hundreds of millions.
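
For a rough sense of scale, Parametrix’s 24-hour figure can be pro-rated by the hour. The Python sketch below assumes losses grow linearly with outage length, which is a simplification of ours rather than Parametrix’s methodology, and it inherits that estimate’s scope (Fortune 500 companies only).

```python
# Parametrix's 2024 estimate cited above: $3.4 billion in Fortune 500 losses
# for a 24-hour AWS regional outage.
FULL_DAY_LOSS_USD = 3.4e9


def prorated_loss(hours: float, full_day_loss: float = FULL_DAY_LOSS_USD) -> float:
    """Scale the 24-hour estimate to a given outage length.

    Assumes losses grow linearly with time, which is a simplification.
    """
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return full_day_loss * hours / 24


for hours in (1, 3, 6):
    print(f"{hours} h -> about ${prorated_loss(hours) / 1e6:,.0f} million")
```

Under this crude model each hour costs roughly $142 million, so an outage lasting several hours lands in the hundreds of millions, in line with the early projections.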

Financial, retail, and healthcare sectors, especially those with 24/7 operations, suffered the worst disruptions.

The Architecture Trap

AWS’s power lies in its interconnected microservices, but that strength is also its weakness. Services such as API Gateway, Lambda, and CloudWatch rely on DynamoDB to store and read data.

When DynamoDB broke, these systems fell like dominoes. An architecture designed for efficiency and scale amplified the damage instead of containing it.

This ripple effect meant a minor internal glitch could snowball into a worldwide meltdown, which is precisely what happened on October 20.
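
The domino effect amounts to a walk through a dependency graph. In this hypothetical Python sketch, the edges are assumptions loosely based on the services named above, not AWS’s actual internal wiring; the function finds everything that depends, directly or indirectly, on one failed component.

```python
from collections import deque

# Hypothetical dependency map: each service -> the services it relies on.
# Edges are illustrative assumptions, not AWS's real internal architecture.
DEPENDS_ON: dict[str, list[str]] = {
    "DynamoDB": [],
    "API Gateway": ["DynamoDB"],
    "Lambda": ["DynamoDB"],
    "CloudWatch": ["DynamoDB"],
    "checkout_app": ["API Gateway", "Lambda"],
    "metrics_dashboard": ["CloudWatch"],
    "static_site": [],
}


def affected_by(failed: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return `failed` plus every service that depends on it, directly or not."""
    # Invert the edges so we can ask "who relies on this service?"
    dependents: dict[str, list[str]] = {name: [] for name in depends_on}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(service)

    # Breadth-first search outward from the failed component.
    down = {failed}
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for user in dependents.get(current, []):
            if user not in down:
                down.add(user)
                queue.append(user)
    return down


print(sorted(affected_by("DynamoDB", DEPENDS_ON)))
```

A failure at the bottom of the graph reaches every service stacked above it, while a service with no path to the failed component (here, `static_site`) keeps working.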

Smart Homes, Dumb Situation

Consumers discovered the irony of “smart” homes that couldn’t function. Amazon Ring doorbells, Alexa voice assistants, and other Internet-of-Things devices stopped responding.

Some homeowners were left unable to operate smart locks or view security feeds. Tools meant to maximise convenience and safety suddenly caused frustration and risk.

When the cloud disappears, connected devices lose their “brains,” reminding users how dependent they’ve become on always-on connectivity.

AWS Responds

Within a few hours, AWS said its engineers were “working across multiple paths toward full recovery.”

By 10:27 BST, it announced “significant signs of improvement,” reassuring users that the problem was internal, not a cyberattack. Speculation about hacking faded as technical updates came in.

AWS gradually restored its services over the rest of the day, but the outage had already inflicted economic and reputational damage.

The Cyberattack Myth

During the outage, social media users flooded platforms with conspiracy theories suggesting Chinese hackers had taken down AWS.

Experts quickly dismissed these claims, finding no evidence of an external attack. AWS’s past major outages have generally stemmed from configuration errors or software faults, not cyberwarfare.

Still, the rumors underscored another danger: how rapidly misinformation spreads in a crisis, when people are searching for immediate answers.

Social Media Erupts

Once users regained access, memes and jokes spread faster than the news itself.

Photos of stressed IT engineers, captions like “Amazon Web Services right now,” and sarcastic comments about the internet’s overreliance on one company dominated platforms like X and Reddit.

Even Elon Musk joined in, boasting that his own platform “doesn’t rely on AWS.” Humor became both a coping mechanism and a commentary on digital centralization.

The Concentration Warning

The incident reignited debate about cloud concentration risk, the danger of so much infrastructure sitting under a few corporate umbrellas.

Gartner ranked over-dependence on a single provider as a top-five business threat in 2025. Regulators in Europe and the US voiced the same concern: if one region of one provider fails, entire sectors halt.

The October outage turned this from an academic discussion into a real-world warning.

Regulatory Reckoning

Global regulators are already responding. The EU’s Digital Operational Resilience Act (DORA), adopted in 2022 and applicable since January 2025, requires financial institutions to report their dependencies on cloud providers.

AWS has announced a European “Sovereign Cloud” to keep European data local, though its US ownership still worries lawmakers. In the US, the SEC is pressing companies to disclose their cloud reliance to investors.

Governments now treat internet resilience not just as a technical issue but as a matter of national security.

Multi-Cloud Strategy

Experts say the solution lies in “multi-cloud” strategies: using several cloud providers at once to reduce risk. Big firms like Netflix already mix AWS and Google Cloud for redundancy.

A 2024 Flexera report found that 90% of enterprises were pursuing multi-cloud setups. Yet many stop short of fully automatic failover between providers, because maintaining duplicate systems is so expensive.
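
The automatic switchover itself is the easy part, as this minimal Python sketch suggests: try the primary provider, and if it fails, move on to the next. The provider names and functions are hypothetical stand-ins. What makes real failover expensive is everything the sketch leaves out, such as keeping data, configuration, and capacity on the backup cloud in sync so it can actually take over.

```python
from collections.abc import Callable


class ProviderUnavailable(Exception):
    """Raised when a (hypothetical) cloud provider cannot serve a request."""


def call_with_failover(providers: list[tuple[str, Callable[[], str]]]) -> tuple[str, str]:
    """Try providers in priority order and return (name, result) from the first that works."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call()
        except ProviderUnavailable as err:
            errors.append(f"{name}: {err}")  # note the failure, then try the next provider
    raise ProviderUnavailable("all providers failed: " + "; ".join(errors))


# Hypothetical stand-ins for the same operation on two different clouds.
def primary_cloud() -> str:
    raise ProviderUnavailable("region unreachable")


def backup_cloud() -> str:
    return "order saved"


print(call_with_failover([("primary", primary_cloud), ("backup", backup_cloud)]))
```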

For smaller firms, proper multi-cloud protection remains financially unrealistic, leaving them vulnerable to single-point breakdowns.

Misinformation Wildfire

Even after AWS’s clarification, false stories kept circulating, claiming that the outage was a government test, a hack, or intentional sabotage.

Crypto communities spread rumors that Coinbase lost funds (it hadn’t).

Reddit threads suggested AWS employees or AI systems were to blame. Fact-checkers spent the day countering these myths.

The chaos showed how disruption in one infrastructure system creates a second, parallel crisis: the spread of disinformation.

Déjà Vu All Over Again

AWS outages aren’t new. In 2020, 2021, and 2022, US-EAST-1 experienced similar breakdowns triggered by network updates and storage overloads.

Each time, AWS promised improvements, yet legacy complexity makes perfect reliability almost impossible. With every fix comes more infrastructure, and more chances for something else to fail.

October 2025 wasn’t an isolated freak accident; it was a warning that the next “internet blackout” is only a matter of time.

The Verdict

The October 20 outage proved how dependent our digital civilization has become on just a few data centers.

A single DNS failure in one AWS region briefly froze banking systems, government portals, video games, and smart homes at once. AWS managed to restore functionality within hours, but the underlying risk persists.

Businesses now face an uncomfortable choice: pay heavily for backup cloud infrastructure or accept their dependence on a few dominant providers.