Anthropic, the fast-growing AI startup, recently hit the headlines with a mammoth settlement. The company is now valued at about $183 billion after a $13 billion funding round.

It boasts over 300,000 business customers and has seen annual revenue skyrocket from roughly $1 billion at the start of 2025 to more than $5 billion by August.

But in September 2025, Anthropic agreed to pay an unprecedented sum to end a copyright lawsuit – a deal that could reshape the entire AI industry.

Legal Earthquake

By mid-2025, a legal “earthquake” had shaken the AI sector. Dozens of copyright lawsuits were filed against AI developers, accusing them of stealing training data.

After some dismissals, about 45 lawsuits remain active, naming companies like OpenAI, Meta, Microsoft, Google, Anthropic, and Nvidia.

Nearly every major AI company is embroiled in this fight. Legal observers call it the largest coordinated challenge to AI data practices in U.S. history – a tsunami of litigation that threatens an industry projected to reshape the global economy.

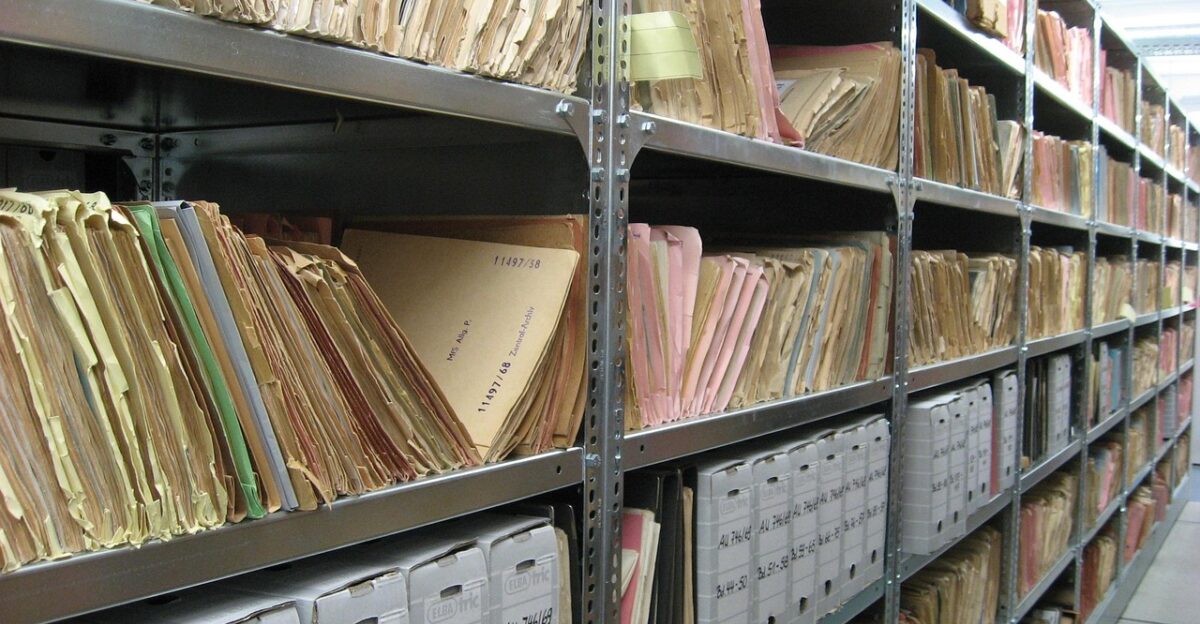

Shadow Archives

At the heart of these cases are the so-called “shadow libraries” of the internet. Sites like Library Genesis (LibGen) accumulate vast, unlicensed caches of books, articles, and papers. LibGen alone is estimated to hold well over 2.4 million non-fiction books (and millions more in fiction, comics, etc.) plus about 80 million academic articles.

In total, the collection spans hundreds of terabytes of data.

These pirate repositories have long flown under the radar, but AI developers have reportedly used them as cheap sources of training material. The Anthropic case has thrust these shadow archives into the spotlight, as publishers and law enforcement push back.

Mounting Pressure

U.S. District Judge William Alsup has become a pivotal figure in this saga. A veteran jurist famous for teaching himself programming languages to tackle tech cases, Alsup drew national attention when he weighed in on AI training. In June 2025, he ruled that Anthropic’s use of legitimately purchased books to train its Claude model was fair use, describing it as “among the most transformative” uses.

However, he rejected fair use for the more than 7 million pirated books Anthropic had downloaded.

His nuanced finding – blessing proper training data but banning stolen libraries – set the stage for a high-stakes trial and kept enormous damages on the table. Alsup’s blend of technical insight and legal authority means his actions could reshape how AI companies gather data across the board.

Record-Breaking Settlement

On Sept. 5, 2025, Anthropic announced it would pay $1.5 billion to settle the class-action suit. That works out to about $3,000 per pirated book (covering roughly 500,000 titles).
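The per-book figure is simple division, sketched below in Python. The two inputs are the numbers reported above; the function and variable names are illustrative only, and the result is a gross amount before any fees or costs come out of the fund.

```python
# Figures as reported in the article; names are illustrative, not from any filing.
SETTLEMENT_USD = 1_500_000_000   # total settlement fund
COVERED_WORKS = 500_000          # approximate number of covered titles


def payout_per_work(fund_usd: int, works: int) -> float:
    """Gross amount per covered work, before any fees or costs are deducted."""
    if works <= 0:
        raise ValueError("works must be positive")
    return fund_usd / works


per_work = payout_per_work(SETTLEMENT_USD, COVERED_WORKS)
print(f"${per_work:,.0f} per work")  # $3,000 per work
```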

As part of the deal, Anthropic must also destroy any pirated copies in its training datasets.

Lawyers for the authors called this a “landmark” outcome. Justin Nelson, co-lead counsel for the writers, hailed the pact as “a powerful message … that taking copyrighted works from these pirate websites is wrong”.

Geographic Impact

The settlement has a global reach. It covers about 500,000 works in the identified libraries, and any author of those works is eligible for $3,000 per title. U.S. writers likely make up the largest slice of the class, but hundreds of thousands of books have an international origin.

Authors in countries with robust copyright protections – from the UK and Canada to Australia and beyond – will share in the compensation. In other words, this is not just an American story.

It underscores how AI’s appetite for data crosses borders: a novel written in Australia or Canada that got swept up in LibGen is as much covered by the settlement as one written in California.

Human Stories

Many authors whose livelihoods were affected have spoken out. Lead plaintiff Andrea Bartz (a thriller novelist) said the news was “an initial, corrective step in a critical battle”.

Fellow writer Kirk Johnson added that the settlement marked “the beginning of a fight on behalf of humans” who don’t want to see creative work sacrificed for AI.

Those voices reflect relief and vindication. Authors Guild president Mary Rasenberger summed it up: “This is an excellent result for authors… sending a strong message to the AI industry”. For many writers – from authors at big publishing houses to indie novelists – it feels like a moment of justice after discovering their books had been used without permission.

Industry Ripples

The Anthropic news immediately rippled through the tech world. Last year, Microsoft made headlines by licensing books through HarperCollins at $5,000 per title as a way to pay authors for AI training use.

The Anthropic deal’s $3,000 per-work rate is notably lower, suggesting pirated content was valued as a “discounted” source.

Observers note that big AI firms are now feverishly auditing their training datasets. After Anthropic’s announcement, industry insiders report, competitors began scanning their libraries for unauthorized content and reopening talks with publishers to secure legitimate licenses.

Market Forces

Anthropic’s finances appear strong enough to absorb this settlement. In August, the company said that revenue growth from roughly $1 billion to more than $5 billion in about eight months made it “one of the fastest-growing technology companies in history”.

Its customer base is massive – over 300,000 businesses are now paying for Anthropic’s AI services.

Its new “Claude Code” developer tool reached more than $500 million in annual run-rate revenue within months of launch. All this growth has given Anthropic a huge war chest.

Judicial Roadblock

Just three days after the settlement announcement, Judge Alsup called a halt to preliminary approval. He lambasted the deal as “nowhere close to complete”, noting that crucial details were missing. In a Sept. 8 hearing, the judge demanded the parties produce the full list of each copyrighted work and author by mid-September, warning that without clarity, the deal couldn’t proceed.

Alsup was especially focused on protecting the authors: he questioned whether too much of the $1.5B might end up as legal fees instead of payments to writers.

He even warned that in many class actions, class members “get the shaft” while attorneys walk away rich.

Internal Tensions

Reports suggest Anthropic’s own lawyers had long feared the worst-case scenario. Under U.S. copyright law, statutory damages can reach $150,000 per infringed work for willful violations. At that rate, the 500,000-book claim could theoretically reach about $75 billion, an existential threat.

Internal documents (later leaked) even acknowledged the company had knowingly used pirated material, creating a potential corporate crisis.

With those risks in mind, the $1.5B deal looks like aggressive risk management. Settling now limits the liability to a fraction of the maximum penalty, buying Anthropic legal peace of mind.
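To make the scale of that risk concrete, here is a rough Python sketch of the theoretical exposure. It assumes the general per-work statutory ranges in 17 U.S.C. § 504(c) ($750 minimum, $30,000 ordinary maximum, $150,000 for willful infringement) and the roughly 500,000 works and $1.5 billion total reported in this article. The names are illustrative, and the output is a set of bounds, not a prediction of what a jury would have awarded.

```python
# Per-work statutory damages under 17 U.S.C. § 504(c) (general ranges).
STATUTORY_MIN = 750          # ordinary minimum per work
STATUTORY_MAX = 30_000       # ordinary maximum per work
WILLFUL_MAX = 150_000        # ceiling per work for willful infringement

# Figures reported in the article.
COVERED_WORKS = 500_000
SETTLEMENT_USD = 1_500_000_000


def exposure(works: int, per_work_usd: int) -> int:
    """Total theoretical damages if every work drew the same per-work award."""
    return works * per_work_usd


scenarios = {
    "statutory minimum": STATUTORY_MIN,
    "ordinary maximum": STATUTORY_MAX,
    "willful maximum": WILLFUL_MAX,
}

for label, rate in scenarios.items():
    total = exposure(COVERED_WORKS, rate)
    share = SETTLEMENT_USD / total  # settlement as a fraction of this scenario
    print(f"{label:<18} ${total:>15,}  settlement is {share:.0%} of this")
```

At the willful ceiling the total is $75 billion, so the settlement comes to about 2% of the theoretical maximum. At the statutory minimum the total is $375 million, well below what Anthropic agreed to pay.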

Leadership Response

CEO Dario Amodei (a former OpenAI research chief) and Anthropic’s leadership tried to spin the settlement as responsible. The company issued statements emphasizing safety and accountability. Anthropic’s lawyers stress that the firm “remains committed to developing safe AI systems” that help organizations without compromising ethical standards.

Employees at headquarters in San Francisco and beyond were briefed on “AI ethics” initiatives, including internal audits of data usage.

Tellingly, Amodei personally presented the move as part of Anthropic’s “constitutional AI” mission, suggesting that the firm would tighten its practices even while admitting no wrongdoing. The leadership is framing the settlement as a step toward doing the “right thing” in AI development.

Strategic Pivot

With court approval pending, Anthropic quickly shifted strategy. The company announced new data provenance tracking measures and pledged to establish formal licensing partnerships with content owners. For example, Anthropic said it would implement technical “kill-switches” to purge any contaminated data from future model training.

Lawyers see this as a new industry norm: AI firms that once scraped the web willy-nilly must now treat data sources like audited supply chains.

As one law review analysis notes, the clear takeaway for AI developers is to “document data sources meticulously and ensure the legitimate acquisition of data”. Many companies have quietly started signing agreements or licensing deals rather than risk another $1.5B scenario.

Expert Skepticism

Yet not everyone is satisfied. Legal experts point out that $3,000 per work is modest given the stakes. One JD Supra commentary warned that $1.5B is “only a fraction” of what companies spend on AI development, and far below the possible statutory maximum. From the creators’ perspective, the award might not fully redress years of unpaid use.

Some analysts are blunt: this deal could be treated as a mere “R&D expense” by tech companies.

They caution that unless courts impose harsher penalties, rivals might gamble that training on pirated content and later negotiating settlements is an acceptable path. Without stronger legal deterrents, the settlement might inadvertently signal that big tech can continue profiting from creative works – so long as it has deep pockets to pay up later.

Future Framework

In sum, the Anthropic agreement is setting new informal rules for AI copyright. Legal analysts say it will become a benchmark for dozens of similar cases. Future plaintiffs will almost certainly point to $3,000-per-book as the starting point for negotiations.

In the corporate world, the precedent has an immediate effect: enterprise customers of AI (for example, banks or hospitals buying large LLM tools) now insist on firmer indemnification clauses and proof that vendors obtained or licensed all their training data.

Companies selling AI services are being forced to treat copyright compliance as a core business requirement. The framework emerging from this case will influence AI development for years – effectively redefining what it means to “build an AI ethically.”

Political Implications

This high-profile case has caught Washington’s attention. Tech giants, fearing a chilling of innovation, have been lobbying the Trump administration to clarify copyright rules for AI. Reports say OpenAI and Google have urged regulators to declare AI training on any data a “fair use” exception, warning that if the U.S. restricts itself, China will seize the lead.

For example, OpenAI told officials: “If [China’s] developers have unfettered access to data and American companies are left without fair use access, the race for AI is effectively over.”

The Anthropic settlement throws a wrench into those arguments. By tying massive liability to unauthorized use, it reinforces the idea that broad exemptions could invite costly lawsuits.

International Reverberations

The Anthropic case is also resonating abroad. In Europe, for example, a coalition of French publishers sued Meta in Paris this year, accusing it of “massive use of copyrighted works without authorization” to train AI models.

Those publishers are demanding that Meta purge any such content – echoing the U.S. authors’ concerns. And under the EU’s AI Act, whose obligations are being phased in, providers of general-purpose AI models must respect EU copyright law and publish a summary of their training data.

As one French industry leader put it, using pirated works for AI is tantamount to cultural “parasitism”.

Enforcement Evolution

Beyond the courtroom, enforcement against shadow libraries has stepped up. In summer 2025, major academic publishers (Elsevier, Springer, Wiley, ACS, Taylor & Francis) obtained a series of DMCA subpoenas compelling Cloudflare to identify the operators of Z-Library, Library Genesis, and other pirate sites.

This follows years of pressure: in late 2022, two alleged operators of Z-Library were arrested in Argentina at the request of U.S. authorities, and the site’s domain names were seized. U.S. prosecutors denounced these sites as running “stolen intellectual property” operations.

Such legal action against pirates helps explain why Anthropic chose to settle. When copyright owners coordinate with law enforcement, it tightens the noose on companies relying on illicit content.

Cultural Shift

Culturally, the case has sparked a reevaluation of the “free knowledge” ethos. For years, many internet users (especially younger ones) justified LibGen and Z-Library as democratizing education. TikTok videos and the hashtag #ZLibrary were shared by millions, praising instant access to books.

But the arrests and lawsuits have cooled that enthusiasm. An art-world columnist notes that Z-Library’s resurgence this year prompted fierce debate: advocates touting “free knowledge for everyone” now clash with critics calling it blatant copyright theft.

As a generation that grew up seeing content circulate freely comes of age, there’s friction: today’s students discover that unfettered data access may carry ethical costs.

New Paradigm

Ultimately, the $1.5 billion settlement is being hailed as a watershed for the AI industry. It signals that the era of “anything goes” in data gathering is over. From now on, companies must treat data provenance and licensing as strategic priorities, not afterthoughts.

As one commentator wrote, this deal has been seen as “the A.I. industry’s Napster moment,” potentially “redefining the legal landscape” for AI models.

Judges and legislators will watch closely as Alsup decides in late September whether to approve the deal. If this agreement stands, it will become the template for resolving the coming wave of copyright fights.