Millions of YouTube subscribers woke up this week to find their favorite movie trailer channels vanished, replaced by a stark, grey “page unavailable” error message. The sudden disappearance of Screen Culture and KH Studio wasn’t a glitch but a targeted execution—YouTube’s most decisive strike yet against the “AI slop” ecosystem.

In a single coordinated action, the platform wiped out over one billion accumulated views, instantly demonetizing a content empire that had successfully hijacked the marketing campaigns of Hollywood’s biggest blockbusters for years.

A Calculated Takedown

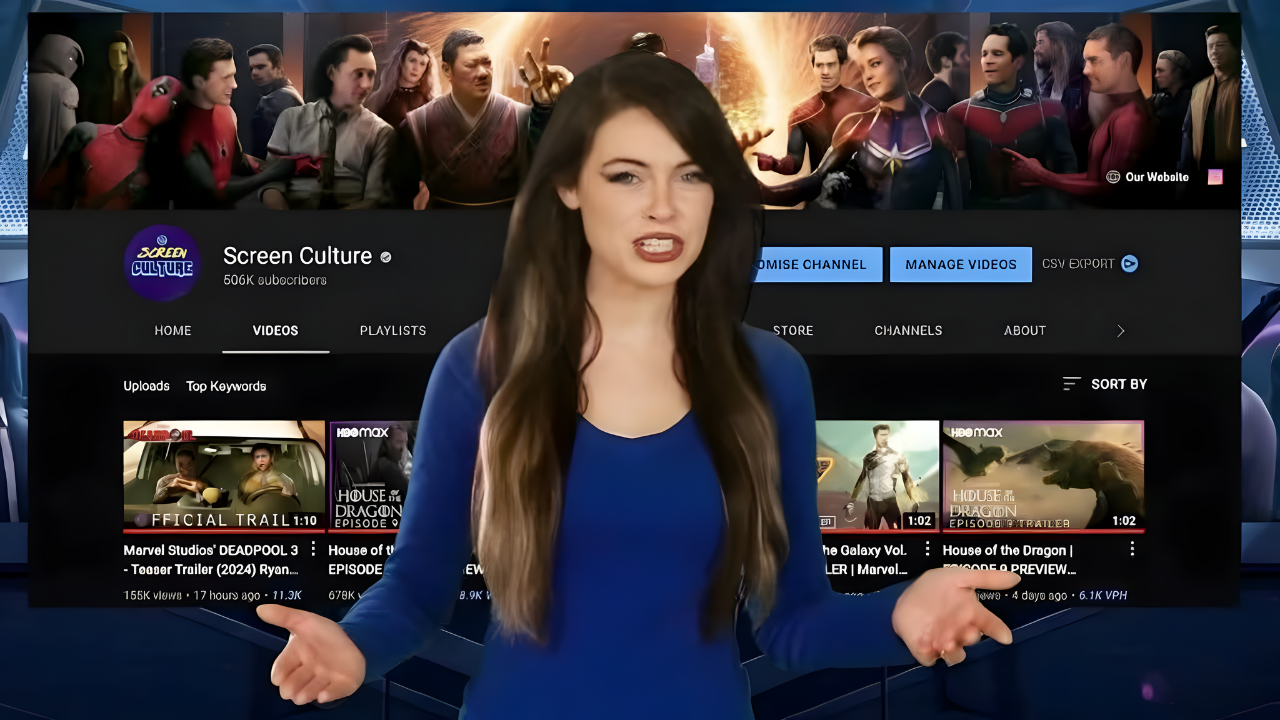

The terminations represent the climax of a high-stakes battle between platform integrity and algorithmic exploitation. Screen Culture, a channel based in India with 1.4 million subscribers, and KH Studio, a U.S.-based operation with nearly 700,000 followers, were permanently banned for violating YouTube’s policies on spam and deceptive practices.

Their business model was simple but devastatingly effective: using generative AI to create convincing fake trailers for upcoming or entirely non-existent movies, effectively siphoning traffic away from legitimate studio releases.

Hijacking the Algorithm

For years, these channels operated with impunity, gaming YouTube’s search algorithms to dominate results for highly anticipated films. When fans searched for news on upcoming Marvel or DC projects, they were often funneled to Screen Culture’s fabricated trailers instead of official sources.

The scale was industrial: an investigation revealed that Screen Culture uploaded 23 different versions of a single fake trailer for The Fantastic Four: First Steps, flooding the zone so thoroughly that authentic content struggled to compete.

The Mechanism of Deception

The deception relied on a sophisticated blend of high-tech tools and low-tech manipulation. Creators used AI software to generate realistic visuals of actors in roles they had never played—Henry Cavill as James Bond or Leonardo DiCaprio in Squid Game.

These visuals were spliced with existing footage and overlaid with AI-synthesized voiceovers. The result was a product just convincing enough to fool casual viewers, generating millions of clicks before the audience realized they were watching a fabrication.

Deceptive Metadata Exposed

Crucially, the ban wasn’t just about the use of AI but about how it was packaged. The channels systematically manipulated metadata (titles, tags, and thumbnails) to disguise their content as official studio releases. By omitting clear labels like “concept” or “fan-made” from their titles, they crossed the line from creative expression into deliberate fraud.

YouTube’s enforcement action specifically cited “misleading metadata” as a primary reason for the termination, signaling a new standard for content transparency.

A Pattern of Defiance

This wasn’t the first time YouTube had intervened. In March 2025, following an exposé by industry trade publication Deadline, the platform initially demonetized both channels, cutting off their ad revenue. At the time, the creators scrambled to comply, adding disclaimers to their video titles to frame their work as “parody” or “concept art.”

This temporary pivot allowed them to regain their status in the YouTube Partner Program, but the compliance was merely a tactical retreat.

The Fatal Error

The channels’ downfall came when they grew bold again. In the months leading up to December, both Screen Culture and KH Studio quietly removed the disclaimers that had saved them, reverting to their original, deceptive titles to boost click-through rates.

This calculated decision to prioritize engagement over transparency proved fatal. YouTube interpreted the regression not as a mistake but as a willful abuse of the “second chance” the channels had been granted.

Stricter Policy Enforcement

The ban also reflects YouTube’s evolving rulebook. In July 2025, the platform updated its monetization rules, renaming the “repetitious content” policy to “inauthentic content” to better address mass-produced and repetitive uploads. Separately, YouTube requires creators to disclose when realistic content is significantly altered or synthetic.

By stripping away their disclosures, the channels placed themselves in direct opposition to these stricter standards, leaving YouTube little choice but to act to protect the integrity of its ecosystem.

Disney Enters the Arena

The timing of the takedown points to a powerful external catalyst: Disney. Just one week before the channels went dark, the entertainment giant sent a blistering cease-and-desist letter to Google.

Disney demanded an immediate halt to the proliferation of AI-generated content that infringed on its intellectual property, citing the “massive scale” of unauthorized videos featuring its characters on YouTube.

The Billion-Dollar Ultimatum

Disney’s legal pressure was accompanied by a strategic maneuver that reshaped the industry landscape. On the same day it threatened Google, Disney announced a landmark $1 billion partnership with OpenAI.

This deal licenses Disney’s characters for use in OpenAI’s Sora video generator, effectively bifurcating the market into “authorized” AI partners (like OpenAI) and “unauthorized” platforms (like YouTube) that host infringing content.

Google’s Strategic Concession

Faced with this pincer movement, in which its biggest content partner paired a legal threat with a deal with a rival AI firm, Google appears to have capitulated.

The termination of the fake trailer channels serves as a peace offering to Hollywood, a tangible demonstration that YouTube is willing to enforce copyright protection even when it means sacrificing millions of views and the associated ad revenue.

The “AI Slop” Epidemic

The problem extends far beyond just two channels. Industry analysts describe a rising tide of “AI slop”—low-quality, mass-produced content designed solely to harvest ad impressions.

A recent study by Kapwing found that AI-generated spam now accounts for a significant percentage of daily uploads. These videos, ranging from fake news to automated celebrity gossip, clog search results and degrade the user experience for everyone.

Economic Incentives Persist

Despite the bans, the economic drivers of fake content remain powerful. AI tools have democratized high-end video production, allowing a single creator to produce content that previously required a studio budget.

As long as YouTube’s monetization program pays for views regardless of quality, creators will be incentivized to test the boundaries of what is permissible, creating a perpetual game of whack-a-mole for moderators.

A Victory for Creators

For legitimate content creators, the crackdown is a long-overdue victory. “The monster was defeated,” one prominent YouTuber remarked, capturing the relief of a community tired of competing against bots.

Authentic movie trailers, genuine reviews, and original fan theories can now reclaim their rightful place in search results, no longer buried under an avalanche of deceptive, algorithmically optimized noise.

The Verification Challenge

Moving forward, the challenge for YouTube will be verification. Distinguishing between a malicious fake trailer and a creative fan edit is becoming increasingly difficult as AI tools improve.

The platform will likely need to invest in more sophisticated detection systems, potentially using watermarking technologies like Google’s own SynthID to automatically flag AI-generated content before it gains traction.

The Role of Viewers

Viewers also play a critical role in this new ecosystem. The success of Screen Culture proved that audiences are hungry for content, even if it’s fake.

To combat deceptive practices effectively, platforms may need to empower users with better reporting tools, allowing the community to flag misleading metadata and AI-generated misinformation more easily than the current system permits.

Industry-Wide Ripple Effects

The terminations set a precedent that will ripple across the social media landscape. Competitors like TikTok and Instagram, which also struggle with AI-generated spam, will be watching closely.

YouTube’s decisive action establishes a new industry standard: platforms can no longer claim neutrality when their algorithms are actively promoting fraud. Liability for AI-generated content is shifting from the creator to the host.

The Future of Marketing

For Hollywood studios, this marks a turning point in digital marketing. The ability to control the narrative around a film’s release is crucial. By successfully pressuring YouTube to remove these “parasitic” channels, studios have regained control over their intellectual property.

Future marketing campaigns will likely include aggressive monitoring and takedown protocols for AI fakes as a standard operating procedure.

A New Era of Authenticity?

Ultimately, the “defeat of the monster” signals a potential return to authenticity. As the novelty of AI-generated spectacles wears off and platforms crack down on deception, there is a renewed premium on human creativity.

The digital landscape is correcting itself, prioritizing genuine connection and verified information over the hollow engagement of synthetic media.

About the Companies

YouTube is a global video-sharing platform owned by Google, a subsidiary of Alphabet Inc. It is the world’s second-most visited website, hosting billions of videos. The Walt Disney Company is a leading diversified international family entertainment and media enterprise.

OpenAI is an AI research and deployment company dedicated to ensuring that artificial general intelligence benefits all of humanity. Screen Culture and KH Studio were independent YouTube channels specializing in movie trailers.

Sources:

“YouTube Shuts Down Major Channels Amid Controversy With Videos Now Seen by Millions, ‘The Monster Was Defeated’.” MSN / Tech & Entertainment, Dec 2025.

“YouTube Now Shutting Down Channels Posting AI Slop.” Futurism, 20 Dec 2025.

“Disney Accuses Google of Using AI to Engage in Copyright Infringement.” Variety, 11 Dec 2025.

“Disney’s $1 billion deal with OpenAI will bring characters to Sora.” Mashable, 11 Dec 2025.

“YouTube Monetization Policy Update (July 2025).” Fliki / YouTube Creator Blog, July 2025.

“Study finds AI slop videos spreading fast across YouTube.” BetaNews / Kapwing, 3 Dec 2025.